The Future of AI-Powered NPCs in Video Games

It's about to become surreal in the AI-powered gaming space

The term "non-playable character," widely shortened to "NPC," was coined in 1975 by the Dungeons and Dragons game. Usage back then was limited to the context of D&D gameplay, but as thousands of games began including NPCs to enrich their gaming experiences, the term exploded in popularity.

NPCs are meant to make the game world feel real, sociable, and alive. They can play major roles within game narratives, either by acting as part of the main story or by guiding the player in some way. Since their actions are all computer-controlled, however, NPCs are limited in both movement and speech; they repeat themselves in both, so the excitement of meeting one in-game fades almost immediately after the first encounter.

While NPCs are rampant across the industry, perhaps some of the most famous examples (ones you'd think of off the top of your head) are found in:

- The Elder Scrolls (Remember the 'I took an arrow to the knee' man in Skyrim?)

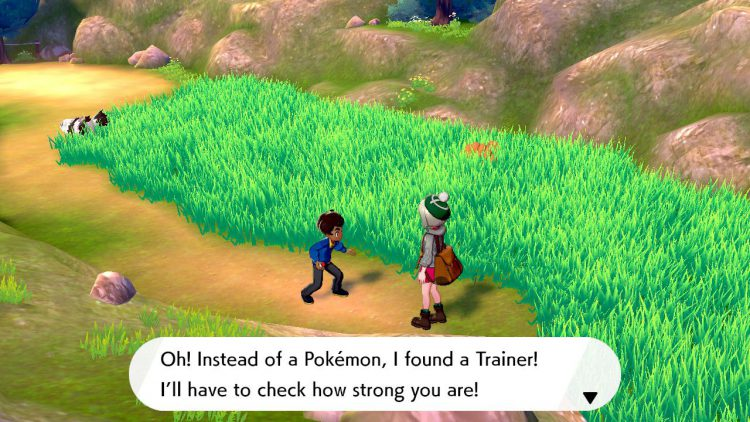

- Pokémon (How could you be the very best trainer, if you're the only trainer?)

- Portal (Who else but GLaDOS?)

- Animal Crossing (Tom Nook, anyone?)

- Undertale (Pretty much all of them are written well)

- Halo (Everyone loves Cortana)

- Zelda (Shoutout to Beedle, who is subject to fun money glitches at times)

That's just the tip of the iceberg; NPCs have already brought joy to our lives, yet they still have so much more potential to reach. Even today's NPCs - albeit more complicated than in the past - have limitations in both dialogue and activity.

NPCs are fundamentally limited due to restrictions on data sizes in games. Everything, including action paths, movement, and other activities relevant to the game, is "hard-coded." NPCs don't act creatively; they only follow the behavior coded into them by the game's developers. It's easy for developers to establish "movement patterns" or "loops" of activity, or to give an NPC a single script to repeat over and over. From a player's perspective, this is boring, and an NPC's value diminishes instantly. Take, for example, any Pokémon trainer:

The issue is obvious: the trainer stands in the middle of the path, and if you don't cross their coded "field of vision," they effectively do nothing. How do they become better trainers if they just stand there? You can see the limitations of trying to simulate a real Pokémon world filled with trainers.
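To make the "hard-coded" idea concrete, here is a minimal Python sketch of a scripted trainer on a tile grid. It is not how any actual Pokémon game is implemented; the class name, the sight range, and the dialogue line are all assumptions made up for illustration. The point is only that the NPC has one trigger and one line, and nothing else.

```python
from dataclasses import dataclass
from typing import Optional

# Unit steps for the four facing directions on a tile grid (y grows downward).
DIRECTIONS = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


@dataclass
class ScriptedTrainer:
    """Hypothetical hard-coded NPC: a fixed position, a fixed gaze, one line."""
    x: int
    y: int
    facing: str
    sight_range: int = 4  # assumed value, in tiles
    line: str = "Our eyes met, so we must battle!"  # made-up dialogue

    def sees(self, player_x: int, player_y: int) -> bool:
        """True only if the player stands on a tile directly in the line of sight."""
        dx, dy = DIRECTIONS[self.facing]
        for step in range(1, self.sight_range + 1):
            if (self.x + dx * step, self.y + dy * step) == (player_x, player_y):
                return True
        return False

    def update(self, player_x: int, player_y: int) -> Optional[str]:
        """Called every frame: repeat the one scripted line, or do nothing at all."""
        return self.line if self.sees(player_x, player_y) else None


trainer = ScriptedTrainer(x=5, y=5, facing="right")
print(trainer.update(7, 5))  # player walks into the line of sight
print(trainer.update(5, 7))  # player walks past below the trainer
```

Walking anywhere outside that single row of tiles leaves the trainer idle forever, which is exactly the limitation described above.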

Of course, some NPCs are intentionally scripted so that the game can progress, such as Navi and Fi from Ocarina of Time and Skyward Sword, respectively. These characters play the role of an "assistant" who contributes to the narrative or supports the player with various instructions or tasks. In these cases, a hard-coded NPC with narrowly defined activities makes sense.

But that still leaves the much larger crowd of NPCs that could benefit from AI and become more creative as a result. Let's explore the current AI capabilities within NPC development, and then we'll dive into the future.

What's Currently Possible

So, could NPCs start acting like real-life characters instead of robots? In other words, can they form opinions, imagine, talk freely, and develop complex relationships with others? The answer is yes, at least to a large extent.

What truly kicked off this discussion was an April 2023 research paper published by Stanford and Google Research. Calling the AI-based NPCs "generative agents," the team created a game environment (a sandbox world, as they called it) to explore the power of characters that could simulate human-like behavior in a creative manner.

The results were eye-opening. Even with narrowly defined prompts, the agents (characters) began forming opinions and participating in the sandbox world. They interacted with others creatively in full language, and their social behaviors felt natural. If you're interested, I urge you to take a look at the paper. It's jaw-dropping how powerful "generative agents" are.

Of course, this takes heavy prompt engineering, since the AI bots must imagine and act creatively like humans. But if the goal is to establish AI-based NPCs at a much larger scale, where anyone can incorporate these technologies into their game, then we're almost there.

As of the writing of this article, there already exist several well-funded AI startups tackling this opportunity. Look no further than companies like Inworld.AI or Character.AI, which are exploring the use of LLMs to develop game characters like never before.

Many AI developers use the OpenAI API and build complex generative agents themselves, as the researchers in the above paper did, but at what scale? We have to think about latency, performance, and cost. When I built my own AI-based character that could talk in a visual novel setting based on a given prompt, two issues arose immediately:

- Latency for each AI calculation, since the API call has to be fulfilled over the internet rather than being computed in-game.

- The characters can "over-think" - meaning they could mention concepts, ideas, or other nouns that have nothing to do with one's game or context.
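Both issues can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code from my game: `fake_llm_reply` is a hypothetical stand-in for a real network call to a hosted model, and the blocklist, its contents, and the fallback line are invented for the example. The sketch times each call and swaps in a canned line whenever the reply mentions something that doesn't belong in the game world.

```python
import re
import time
from typing import Callable, Set, Tuple

# Hypothetical list of terms that have no place in this game's setting.
OUT_OF_WORLD_TERMS = {"internet", "smartphone", "openai"}
# Hypothetical canned line used when a generated reply drifts off-context.
FALLBACK_LINE = "I... lost my train of thought."


def fake_llm_reply(prompt: str) -> str:
    """Stand-in for a remote model call; the sleep simulates network latency."""
    time.sleep(0.05)
    return "Have you checked the internet for that?"


def timed_reply(generate: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Run the generator and return (reply, elapsed seconds)."""
    start = time.perf_counter()
    reply = generate(prompt)
    return reply, time.perf_counter() - start


def off_context_terms(reply: str) -> Set[str]:
    """Return the blocklisted words that appear in the reply, if any."""
    words = set(re.findall(r"[a-z']+", reply.lower()))
    return words & OUT_OF_WORLD_TERMS


def guarded_reply(generate: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Time the call, then replace off-context replies with the fallback line."""
    reply, latency = timed_reply(generate, prompt)
    if off_context_terms(reply):
        reply = FALLBACK_LINE
    return reply, latency


reply, latency = guarded_reply(fake_llm_reply, "Convince her to stop the experiment.")
print(f"{reply!r} after {latency * 1000:.0f} ms")
```

A word blocklist is a crude filter, and a real game would likely need something smarter, but it shows where the latency is paid (inside `generate`) and where a context check could sit before the line reaches the player.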

You can see these issues in action in the demo video of my game:

My Visual Novel demo, where I have two characters talk to each other based on narrowly-defined prompts. The girl on the left is trying to indirectly stop the girl on the right from continuing her experiment, as it may have long-term negative impacts on the world. Source: Author

The Future

Players and developers alike have been exploring NPC behavior for years: what movement patterns they follow, what conversations they have, and how they support the game narrative. The introduction of actual AI to simulate real-life social behaviors will absolutely revolutionize gaming - especially open-world RPGs, which are loaded with NPCs to fill their worlds.

We're not just talking about conversations or activity among NPCs alone; the player's own character could be subject to experimentation as well. Games could ask players to write their own greetings to NPCs, or to add prompts that give their character a fitting personality. From there, the player's character could act on its own when the player is AFK (away from the keyboard), converse creatively with other characters by itself, or even talk back to the player like another person. You see where I'm going here - the possibilities seem endless.

It won't be long before large AAA studios - even traditional powerhouses such as Nintendo - start bringing complex AI NPCs into their game worlds. Of course, such an undertaking would involve a gradual adoption process, with millions of hours spent on experimentation along the way, but without a doubt, it's doable and realistic.

Let's see what studios and AI platforms have in store for us gamers (and developers!) in gaming worlds that continue to become more social and immersive. And with AI models improving in quality and performance every year, we'll get there in no time.